The extraction of data from personal documents is a standard step in the KYC (Know Your Customer) process. Automating this extraction offers benefits such as enhanced accuracy, improved efficiency, and cost-effectiveness. At Aircash, we have built a scalable in-house personal document data extraction system, which is one component of our in-house video verification solution.

Scalability

The scalability of a personal document data extraction system primarily depends on the characteristics of the problem domain. In each country, there are typically multiple types of personal documents, including identity cards, passports, driving licenses, and residence-related documents. Each personal document type may have multiple versions. Furthermore, there is a challenge in dealing with different languages across countries. In addition to challenges within the domain, there are technical issues to consider. Some personal documents have only one side, whereas the majority have two sides. Moreover, captured images of personal documents can be in arbitrary orientations. Finally, future maintenance and the addition of new documents to the system should require minimal effort.

Aircash Solution

At Aircash, we have managed to build a scalable solution using a combination of robust deep learning models, heuristics, and external providers for certain components. We add new documents as we expand our business, currently supporting documents in the following 11 countries: Austria, Bulgaria, Croatia, Cyprus, Germany, Greece, Italy, Poland, Romania, Slovenia, and Spain. The process of adding a new document to the system takes approximately 30 minutes with a single person working on it, and it can be done on the fly without the need for a system restart. The average accuracy of the system across all countries and documents is 92%, with an average processing time of 1.2 seconds per document. All processing is currently done on the CPU, ensuring cost-effectiveness.

The solution is divided into two parts. The first part is responsible for detecting and recognizing document type, country, version, side, and orientation in captured images. After processing all captured images and successfully detecting and recognizing documents, the second part includes optical character recognition (OCR) and extracting information from the required fields (parsing), such as name, surname, PIN, date of birth, and more.

Detection and Recognition

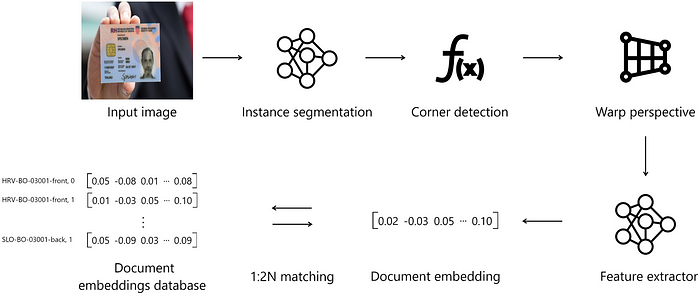

The detection and recognition part involves two lightweight deep learning models (an instance segmentation model and a feature extractor), a custom corner detection heuristic, a perspective warp transformation, a database of document side embeddings, and 1:2N matching of document embeddings, where N represents the number of supported document sides.

The input image is fed into a state-of-the-art deep learning instance segmentation model, which outputs a binary mask for each document in the image. The instance segmentation model is trained on a diverse set of document types, sides, and orientations to ensure robustness and support for new documents.

Once the binary mask of the document is obtained, the next step is custom corner detection. This heuristic finds the four corners of the quadrilateral in the binary mask. Even though it might seem like an easy task, imperfections in the binary mask make the problem much more challenging, so we had to develop a custom corner detection solution to ensure the robustness and accuracy of the entire pipeline.

The next step is a perspective warp of the original image with respect to the obtained corners. With this transformation, we remove the background and obtain a rectangle representing the document. This is crucial because it effectively reduces the dimensionality of the problem, especially for the later processing steps in the pipeline.

The warped document is then fed into the deep learning document feature extractor model, which outputs an n-dimensional embedding of the document. The feature extractor model is trained using a state-of-the-art metric learning technique on a diverse set of document types, sides, and orientations. The document embeddings database is prepopulated with the supported document sides and their corresponding embeddings. The final recognition is done with 1:2N matching: the most similar document side in the database is selected, provided its cosine similarity is above a predefined threshold. The database holds 2N document embeddings because each document side has 2 embeddings, one in normal orientation and one in flipped orientation, which lets us determine the orientation as well. Recognized and warped document sides are sent to the OCR and parsing process.
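The 1:2N matching step can be illustrated with a minimal sketch. It assumes the embeddings are already computed; the function names, the side labels, the 0.8 threshold, and the 3-dimensional toy vectors are hypothetical and chosen only for illustration.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical database key: (document side label, orientation).
Key = Tuple[str, str]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_document(
    query: List[float],
    database: Dict[Key, List[float]],
    threshold: float = 0.8,  # assumed value, not taken from the article
) -> Optional[Tuple[Key, float]]:
    """Return the most similar (side, orientation) entry, or None if the
    best cosine similarity is not above the threshold."""
    best_key: Optional[Key] = None
    best_score = -1.0
    for key, embedding in database.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_key, best_score = key, score
    if best_key is None or best_score <= threshold:
        return None
    return best_key, best_score


# Toy database: N = 2 sides, so 2N = 4 embeddings (normal and flipped).
database: Dict[Key, List[float]] = {
    ("HR_ID_front", "normal"): [1.0, 0.0, 0.0],
    ("HR_ID_front", "flipped"): [0.0, 1.0, 0.0],
    ("HR_ID_back", "normal"): [0.0, 0.0, 1.0],
    ("HR_ID_back", "flipped"): [-1.0, 0.0, 0.0],
}

if __name__ == "__main__":
    print(match_document([0.1, 0.9, 0.0], database))  # closest to front, flipped
    print(match_document([1.0, 1.0, 1.0], database))  # nothing above threshold
```

Because the matched key carries both the side and the orientation, a single nearest-neighbour lookup answers both questions at once. A linear scan is enough for a sketch; a larger database might call for a vector index.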

OCR and Parsing

The OCR and parsing process involves an Azure or Google OCR component, a document parsing heuristic, a database for storing document configuration templates, and a data validation component.

Warped document sides are concatenated and sent to one of the OCR providers, either Google or Azure. The choice of provider depends on multiple variables, the most important being the language of the recognized document sides. For example, at the time of writing, Google supports Greek, but Azure does not, so a recognized Greek document is sent to Google. The response from the OCR provider is then split so that the text is matched to each warped document side.

Once the document sides are available with their recognized versions, sides, and texts, the next step is document parsing. Parsing is template-based: the locations of the target fields have to be defined for each document and each of its sides. The parsing heuristic can also make use of the type of each target field, such as date or number. All of this information, along with the relative locations of the target fields, is stored in a database of document config templates. The correct template is selected from the database based on the recognized versions of the document sides.

The last step of the pipeline is the validation of the parsed fields. In this step, multiple checks are performed, such as an MRZ cross-check and data type checks.
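Template-based parsing of this kind can be sketched as follows. This is an illustration under stated assumptions, not the actual heuristic: the `Word` and `Field` structures, the relative box format, the date pattern, and the sample values are all hypothetical, and each field is assumed to sit on a single line.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Word:
    """One OCR word with its center in pixels on the warped document side."""
    text: str
    cx: float
    cy: float


@dataclass
class Field:
    """One template entry: relative box (x0, y0, x1, y1) in [0, 1] and a type."""
    name: str
    box: Tuple[float, float, float, float]
    kind: str  # "text", "date" or "number"


def normalize(value: str, kind: str) -> Optional[str]:
    """Type-aware cleanup; returns None when the value does not fit the type."""
    if kind == "date":
        # Assumed input format DD.MM.YYYY (also accepts '/' and '-').
        m = re.fullmatch(r"(\d{2})[./-](\d{2})[./-](\d{4})", value)
        return f"{m.group(3)}-{m.group(2)}-{m.group(1)}" if m else None
    if kind == "number":
        digits = re.sub(r"\D", "", value)
        return digits or None
    return value or None


def parse_side(
    words: List[Word], template: List[Field], width: float, height: float
) -> Dict[str, Optional[str]]:
    """Collect the OCR words whose centers fall inside each field's box."""
    parsed: Dict[str, Optional[str]] = {}
    for field in template:
        x0, y0, x1, y1 = field.box
        hits = [
            w for w in words
            if x0 <= w.cx / width <= x1 and y0 <= w.cy / height <= y1
        ]
        hits.sort(key=lambda w: w.cx)  # left to right, single-line fields only
        raw = " ".join(w.text for w in hits)
        parsed[field.name] = normalize(raw, field.kind)
    return parsed


# Hypothetical template and OCR output for one 1000x600 warped side.
template = [
    Field("surname", (0.30, 0.20, 0.70, 0.30), "text"),
    Field("name", (0.30, 0.33, 0.70, 0.43), "text"),
    Field("date_of_birth", (0.30, 0.47, 0.70, 0.57), "date"),
]
words = [
    Word("REPUBLIKA", 300, 40),  # header text, outside every field box
    Word("HORVAT", 420, 150),
    Word("ANA", 400, 230),
    Word("01.02.1990", 430, 310),
]

if __name__ == "__main__":
    print(parse_side(words, template, width=1000, height=600))
```

Storing field boxes as fractions of the side's width and height keeps the template independent of the resolution of the warped image, which is one reason the perspective warp earlier in the pipeline matters.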

Adding a New Document

There are two steps to add support for a new document in the system:

- Two document embeddings (normal and flipped orientation) for each side of the document have to be added to the document embeddings database in the detection and recognition part. Document embeddings are generated using the same deep learning feature extractor. The model is already available, so the only task is to prepare images of each side of the document, which can typically be found on PRADO.

- A config template for the document has to be added to the database of document config templates. Creating the template takes most of the time in the addition process because all information for all the required fields has to be included. We built a simple, user-friendly interface that simplifies template creation.

All these changes can be done on the fly, which means that the system doesn’t have to be restarted, and on average, the entire process takes 30 minutes.

Alternatives

Our solution is very specific, and each component is carefully developed to ensure the robustness of the entire pipeline and maximum accuracy. In recent research, there are many approaches that propose end-to-end solutions, meaning that a single model can predict the target fields, for example, the Pix2Struct model. The problem with those approaches is that they require a lot of labeled training data. Moreover, the addition of a new document most probably requires model retraining for optimal results. Our approach requires significantly less labeled training data for both models and doesn’t require retraining.

Apart from end-to-end solutions, there are different approaches to some of the components or combinations of components. For example, detecting the corners of the document can be performed in multiple ways, such as through edge detection followed by finding the intersections of the edges. In our experiments, our approach turned out to be superior. Another approach, called visual parsing, replaces the document parsing heuristic with a machine learning model that effectively performs object detection of the target fields. The advantage of this approach is that parsing becomes purely a data problem. The drawback is that data labelling and model retraining are needed for each new document. As we don’t have a lot of available training data, the template-based method was superior in our case.
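For comparison, the edge-intersection alternative for corner detection can be sketched as below. It assumes the four document edges have already been fitted as straight lines by some earlier edge detection step, which is not shown; the function names and the sample coordinates are hypothetical. This is the alternative mentioned above, not our custom heuristic.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Line = Tuple[Point, Point]  # an infinite line given by two points on it


def intersect(l1: Line, l2: Line) -> Optional[Point]:
    """Intersection of two infinite lines, or None if they are parallel."""
    (x1, y1), (x2, y2) = l1
    (x3, y3), (x4, y4) = l2
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-9:
        return None
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    px = (a * (x3 - x4) - (x1 - x2) * b) / denom
    py = (a * (y3 - y4) - (y1 - y2) * b) / denom
    return (px, py)


def corners_from_edges(
    top: Line, right: Line, bottom: Line, left: Line
) -> Optional[List[Point]]:
    """Corners in the order top-left, top-right, bottom-right, bottom-left."""
    pairs = [(top, left), (top, right), (bottom, right), (bottom, left)]
    corners = [intersect(a, b) for a, b in pairs]
    if any(c is None for c in corners):
        return None  # two neighbouring edges were parallel
    return corners


if __name__ == "__main__":
    # Hypothetical edge lines in pixel coordinates.
    top = ((0.0, 10.0), (100.0, 10.0))
    right = ((90.0, 0.0), (90.0, 60.0))
    bottom = ((0.0, 50.0), (100.0, 50.0))
    left = ((5.0, 0.0), (5.0, 60.0))
    print(corners_from_edges(top, right, bottom, left))
```

The sketch also hints at why this route is fragile: it depends on exactly four clean edge lines being found, and background clutter or partially hidden edges can break that assumption.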

Conclusion

Building a scalable personal document data extraction system is a complex task. Depending on the business use case and available resources, mostly data resources, there are multiple approaches. Our in-house solution enabled us to significantly speed up the user onboarding process, but even more importantly, it provided us with a foundation to build upon. Personal document forgery is becoming increasingly common, and our approach to detecting and mitigating those attempts is one of the backbones of secure financial transactions. In the next topic, we will go into more detail on those approaches.
